Desktop Survival Guide

by Graham Williams

DATA MINING

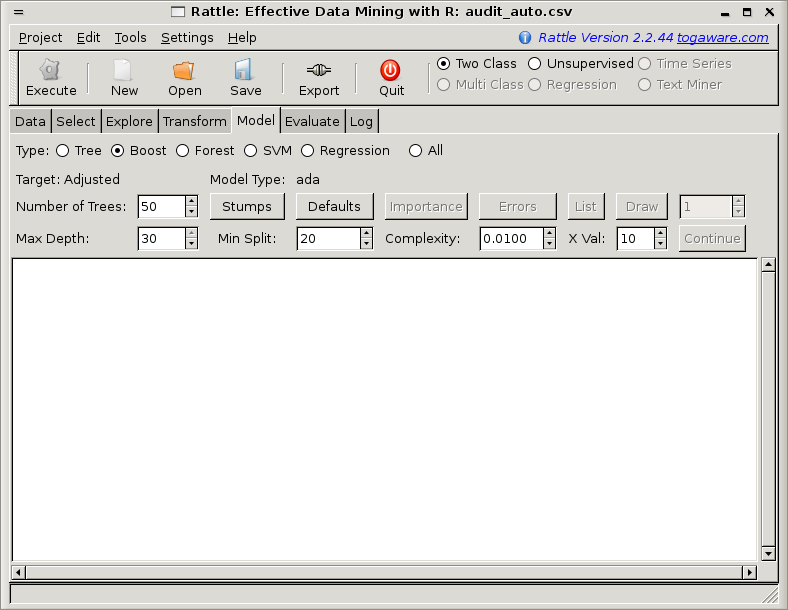

Tuning Parameters

There are quite a few options exposed by Rattle for boosting our decision tree model. We will explore how these can help us to build the best model.

It is not always clear exactly how many trees we should build for our ensemble. The default for ada is to build 50 trees. But is that enough? The Boost functionality in Rattle allows trees to be added to an existing ensemble, so that we can easily explore whether more trees will improve the performance of the model. To do so, simply increase the value specified in the Number of Trees text box and click the Continue button. This will pick up the model building from where it left off and build as many more trees as are needed to reach the specified number of trees.

Note that by default ada uses bag.frac=0.5, which means it uses bagging to select a random sample from the population (a 50% bag in this case). This generally improves the performance of the resulting model.
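The idea of a 50% bag can be illustrated with a minimal sketch. It is written in Python for illustration only and is not the code that ada runs. The function name `draw_bag` and its arguments are hypothetical, and sampling without replacement is an assumption made here rather than something stated above.

```python
import random

def draw_bag(n_rows, bag_frac=0.5, rng=None):
    """Split row indices into an in-bag sample and the remaining out-of-bag rows.

    Assumption: rows are drawn without replacement, so the bag holds
    roughly bag_frac * n_rows distinct rows.
    """
    rng = rng or random.Random()
    bag_size = max(1, int(round(n_rows * bag_frac)))
    in_bag = sorted(rng.sample(range(n_rows), bag_size))
    chosen = set(in_bag)
    out_of_bag = [i for i in range(n_rows) if i not in chosen]
    return in_bag, out_of_bag

# Draw one bag from a hypothetical dataset of 10 rows.
in_bag, out_of_bag = draw_bag(10, bag_frac=0.5, rng=random.Random(42))
print("in bag:    ", in_bag)
print("out of bag:", out_of_bag)
```

In a boosting loop a fresh bag of this kind would be drawn for each tree, so each tree sees a different half of the data.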

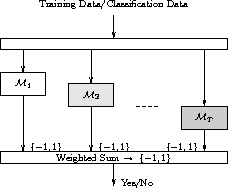

The Boosting meta-algorithm is an efficient, simple, and easy to program learning strategy. The popular variant called AdaBoost (an abbreviation for Adaptive Boosting) has been described as the "best off-the-shelf classifier in the world" (attributed to Leo Breiman by (, p. 302)).

Boosting algorithms build multiple models from a dataset, using some other learning algorithm that need not be a particularly good learner. Boosting associates weights with entities in the dataset, and increases (boosts) the weights for those entities that are hard to accurately model. A sequence of models is constructed and after each model is constructed the weights are modified to give more weight to those entities that are harder to classify. In fact, the weights of such entities generally oscillate up and down from one model to the next. The final model is then an additive model constructed from the sequence of models, each model's output weighted by some score.

There is little tuning required and little is assumed about the learner used, except that it should be a weak learner! We note that boosting can fail to perform if there is insufficient data or if the weak models are overly complex. Boosting is also susceptible to noise.
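The weighting scheme described above can be sketched in a few lines. The following is a minimal illustration of discrete AdaBoost in Python, using one-split decision stumps on a single numeric variable as the weak learner. It is not the implementation used by ada or Rattle, which build full decision trees. All function names and the toy data are hypothetical. The `boost` function also accepts an existing model and its weights, mimicking the way more trees can be added to an ensemble that has already been built.

```python
import math

def stump_predict(stump, x):
    """Predict -1 or +1 from a one-split stump (threshold, polarity)."""
    threshold, polarity = stump
    return polarity if x > threshold else -polarity

def best_stump(xs, ys, weights):
    """Return the stump with the lowest weighted error, and that error."""
    values = sorted(set(xs))
    # Candidate splits: below the minimum, then midpoints between neighbours.
    thresholds = [values[0] - 1.0]
    thresholds += [(a + b) / 2 for a, b in zip(values, values[1:])]
    best, best_err = None, float("inf")
    for threshold in thresholds:
        for polarity in (1, -1):
            stump = (threshold, polarity)
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if stump_predict(stump, x) != y)
            if err < best_err:
                best, best_err = stump, err
    return best, best_err

def boost(xs, ys, n_trees, model=None, weights=None):
    """Grow the ensemble to n_trees stumps, continuing from model if given."""
    n = len(xs)
    model = list(model) if model else []
    weights = list(weights) if weights else [1.0 / n] * n
    while len(model) < n_trees:
        stump, err = best_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)    # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # score for this stump
        model.append((alpha, stump))
        # Boost the weights of misclassified entities, shrink the others.
        weights = [w * math.exp(-alpha * y * stump_predict(stump, x))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return model, weights

def predict(model, x):
    """Additive model: sign of the score-weighted sum of stump outputs."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in model)
    return 1 if score >= 0 else -1

def training_error(model, xs, ys):
    return sum(predict(model, x) != y for x, y in zip(xs, ys)) / len(xs)

# Toy data that no single stump can classify perfectly.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

model1, weights1 = boost(xs, ys, n_trees=1)
# Continue from the one-stump model up to three stumps.
model3, weights3 = boost(xs, ys, n_trees=3, model=model1, weights=weights1)
print(training_error(model1, xs, ys), training_error(model3, xs, ys))
```

On this toy data a single stump misclassifies three of the ten entities, while the three-stump ensemble classifies all ten correctly, even though each stump on its own is a weak learner.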

Copyright © Togaware Pty Ltd